
Choose Which Cisco OS to Boot

Let’s have a look at which Cisco OS gets booted at startup and how we can influence that decision using the configuration register.


Quality of Service (QoS)

Let’s have a look at QoS or how to unfairly manage traffic in the face of congestion on Cisco devices.


OSPF

What follows is basically a summary of what I have learned about OSPF during my CCNA studies.


Convert Cisco Lightweight AP to Autonomous

If you have a Cisco AP that has CAP in the model, you have a so-called lightweight AP that is supposed to be controlled by a wireless controller (WLC). I was able to convert my AIR-CAP2602E-E-K9 to autonomous mode by loading different firmware. This post discusses how to do that.


Cisco LAN Routing

Let’s look at several ways to route between VLANs in the Cisco world.


EtherChannel

Let’s have a look at how we can use redundant layer-2 links between two Cisco devices.


Run SpinRite on Librem 5 Internal Storage

I have a Purism Librem 5 Linux phone. The Evergreen batch has 32 GiB of flash storage. In this post I will show how to run SpinRite on that drive.


Convert Linux into NAT Router

Sometimes it comes in handy to have a Linux box act as a router. You might not have a switch lying around. You might want to monitor all traffic going to and coming from some device. In this post, we will set up Linux to act as a simple IPv4 NAT router.


Create Server Backup from Local Machine with Borg

How do you back up your servers with borg? One way to do it, as described in the fine borg documentation, is a pull backup using sshfs. The idea is to mount the remote filesystem in a local directory, chroot into it, and run borg inside the chroot.


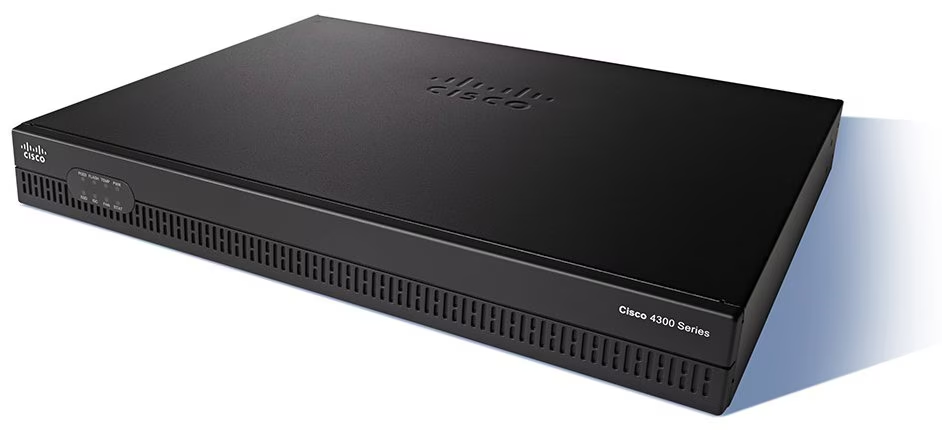

Updating My Cisco 4321 Router's IOS over HTTP, FTP and TFTP and More

I recently got my hands on a second-hand Cisco 4321 router. First things first, I want to upgrade to the latest IOS version so I have the latest security patches. Since I had no experience at all with IOS, here are some things I learned along the way.
